Operating Open vSwitch brings a new set of challenges.

One of those challenges is managing Open vSwitch itself and making sure you’re up to date with performance and stability fixes. For example, in late 2013 there were significant performance improvements with the release of 1.11 (flow wildcarding!), and in the 2.x series there are even more improvements coming.

This means everyone running those old versions of OVS (I’m looking at you, <=1.6) should upgrade and get these huge performance gains.

There are a few things to be aware of when upgrading OVS:

- Reloading the kernel module is a data-plane-impacting event. It’s minimal: most won’t notice, and those who do see only a quick blip. The duration of the interruption is a function of the number of ports and the number of flows present before the upgrade.

- Along those lines, if you orchestrate OVS kernel module reloads with parallel-ssh or Ansible or really any other tool, be mindful of the connection timeouts. All traffic on the host will be momentarily dropped, including your SSH connection! Set your SSH timeouts appropriately or bad things happen!

- Pay very close attention to kernel upgrades and OVS kernel module upgrades: the installed OVS kernel module needs to match the kernel the host will boot into. Failure to do so could mean your host networking does not survive a reboot!

- Changes you’ve made outside of OVS/OVSDB to objects that OVS manages, e.g., manually configured tc buckets, will be destroyed.

- If you use XenServer, by upgrading OVS beyond what’s delivered from Citrix directly, you’re likely unsupported.
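For the SSH timeout caveat above, one option is to detach the reload from the SSH session and give the client generous keepalive settings. Here is a minimal sketch, assuming OpenSSH on the machine doing the orchestration. The function name, host name, log path, and timeout values are all illustrative assumptions, not recommendations from the OVS project:

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the ssh command that would reload the OVS kernel
# module on a host. The timeout values are assumptions; tune them to your fleet.
reload_cmd() {
    local host=$1
    # ConnectTimeout: seconds to wait for the TCP connection to come up.
    # ServerAliveInterval * ServerAliveCountMax: roughly how long the client
    # tolerates silence from the server before giving up (15 * 8 = 120 seconds).
    local opts="-o ConnectTimeout=30 -o ServerAliveInterval=15 -o ServerAliveCountMax=8"
    # nohup + background so the reload can finish even if the session drops.
    echo "ssh $opts $host 'nohup /etc/init.d/openvswitch force-kmod-reload < /dev/null > /tmp/ovs-reload.log 2>&1 &'"
}

# Print the command for review instead of executing it.
reload_cmd hv01.example.com
```

Printing the command rather than running it makes it easy to eyeball before pasting it into parallel-ssh or an Ansible task.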

Here is a rough outline of the OVS upgrade process for an individual hypervisor:

- Obtain Open vSwitch packages

- Install Open vSwitch userspace components and kernel module(s) (see the third caveat above and “Where things can really go awry”)

- Load new Open vSwitch kernel module (/etc/init.d/openvswitch force-kmod-reload)

- Simplified Ansible Playbook: https://gist.github.com/andyhky/9983421
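The outline above can be sketched as a single script. This is a sketch under assumptions, not the playbook linked above: the package directory and the `rpm -Uvh` install step are placeholders for however you distribute packages, and `DRY_RUN` is a hypothetical switch that prints commands instead of running them. Only the `force-kmod-reload` step comes from the outline itself.

```bash
#!/usr/bin/env bash
# Sketch of the per-hypervisor upgrade steps. PKG_DIR is a hypothetical location.
PKG_DIR=${PKG_DIR:-/root/ovs-packages}
DRY_RUN=${DRY_RUN:-1}   # default to printing commands only

# Run a command, or just print it when DRY_RUN is 1.
run() {
    if [[ $DRY_RUN == 1 ]]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

upgrade_ovs() {
    # Obtain + install: packages are assumed to be staged in PKG_DIR already;
    # userspace and kernel module RPMs are installed together.
    run rpm -Uvh "$PKG_DIR"/openvswitch-*.rpm || return 1
    # Load the new kernel module (brief data plane interruption).
    run /etc/init.d/openvswitch force-kmod-reload || return 1
}

upgrade_ovs
```

Leaving `DRY_RUN` at its default lets you confirm which commands would run on a host before committing to the reload.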

The INSTALL file provides more detailed upgrade instructions. In the old days, upgrading Open vSwitch meant you had to either reboot your host or rebuild all of your flows because of the kernel module reload. Since the introduction of force-kmod-reload, the upgrade process is more durable and less disruptive.

Where things can really go awry

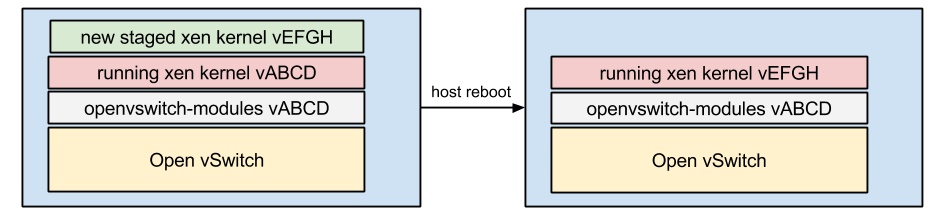

If your OS has a new kernel pending, e.g., after a XenServer service pack, you will want to install the OVS kernel module packages for both your running kernel and the one that will be running after reboot. Failing to do so can result in losing connectivity to your machine.

It is not a guaranteed loss of networking when the Open vSwitch kernel module doesn’t match the xen kernel module, but it is a best practice to ensure they are in lock-step. The cases I’ve seen happen are usually significant version changes, e.g., 1.6 -> 1.11.

You can check if you’re likely to have a problem by running this code (XenServer only, apologies for quick & dirty bash):

[code language="bash"]
#!/usr/bin/env bash
# Check that an OVS kernel module RPM is installed for both the running
# kernel and the pending (post-reboot) kernel. XenServer only.
RUNNING_XEN_KERNEL=$(uname -r | sed s/xen//)
PENDING_XEN_KERNEL=$(readlink /boot/vmlinuz-2.6-xen | sed s/xen// | sed s/vmlinuz-//)
OVS_BUILD=$(/etc/init.d/openvswitch version | grep ovs-vswitchd | awk '{print $NF}')

rpm -q "openvswitch-modules-xen-$RUNNING_XEN_KERNEL-$OVS_BUILD" > /dev/null
if [[ $? == 0 ]]
then
    echo "Current kernel and OVS modules match"
else
    CURRENT_MISMATCH=1
    echo "Current kernel and OVS modules do not match"
fi

rpm -q "openvswitch-modules-xen-$PENDING_XEN_KERNEL-$OVS_BUILD" > /dev/null
if [[ $? == 0 ]]
then
    echo "Pending kernel and OVS modules match"
else
    PENDING_MISMATCH=1
    echo "Pending kernel and OVS will not match after reboot. This can cause system instability."
fi

# Exit non-zero if either check failed so callers can act on it.
if [[ $CURRENT_MISMATCH == 1 || $PENDING_MISMATCH == 1 ]]
then
    exit 1
fi
[/code]
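To make the name matching in the check above concrete, here is a sketch of how an RPM name is derived from a kernel string. The helper names and the sample kernel and OVS version strings are made up for illustration; on a real host the values come from `uname -r`, the `/boot/vmlinuz-2.6-xen` symlink, and the init script.

```bash
#!/usr/bin/env bash
# Hypothetical helpers mirroring the sed pipelines in the check script.
kernel_from_uname()   { echo "$1" | sed s/xen//; }
kernel_from_symlink() { echo "$1" | sed s/xen// | sed s/vmlinuz-//; }
module_rpm_name()     { echo "openvswitch-modules-xen-$1-$2"; }

# Sample inputs (invented, not taken from a real host).
running=$(kernel_from_uname "2.6.32.43-0.4.1.xs1.6.10.734.170748xen")
pending=$(kernel_from_symlink "vmlinuz-2.6.32.43-0.4.1.xs1.8.0.835.170778xen")

# These are the names the check would hand to rpm -q.
module_rpm_name "$running" "1.11.0"
module_rpm_name "$pending" "1.11.0"
```

If the two printed names differ, as they do here, both packages need to be installed before the reboot.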

Luckily, this can be rolled back. Access the host via DRAC/iLO and roll back the vmlinuz-2.6-xen symlink in /boot to one that matches your installed openvswitch-modules RPM. I made a quick and dirty bash script which can roll back, but it won’t be too useful unless you put the script on the server beforehand. Here it is (again, XenServer only):

[code language="bash"]
#!/usr/bin/env bash
# Not guaranteed to work. YMMV and all that.
# Kernel versions for which an OVS kernel module RPM is installed.
OVS_KERNEL_MODULES=$(rpm -qa 'openvswitch-modules-xen*' | sed s/openvswitch-modules-xen-// | cut -d "-" -f1,2)
# Kernel versions present in /boot (real files only, symlinks excluded).
XEN_KERNELS=$(find /boot -name "vmlinuz*xen" \! -type l -exec ls -ld {} + | awk '{print $NF}' | cut -d "-" -f2,3 | sed s/xen//)
# A version that appears in both lists shows up as a duplicate.
COMMON_KERNEL_VERSION=$(echo $XEN_KERNELS $OVS_KERNEL_MODULES | tr " " "\n" | sort | uniq -d)
stat /boot/vmlinuz-${COMMON_KERNEL_VERSION}xen > /dev/null
if [[ $? == 0 ]]
then
    # Point the boot symlink back at the kernel that has a matching module.
    rm /boot/vmlinuz-2.6-xen
    ln -s /boot/vmlinuz-${COMMON_KERNEL_VERSION}xen /boot/vmlinuz-2.6-xen
else
    echo "Unable to find kernel version to roll back to! :(:(:(:("
fi
[/code]
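The rollback script relies on `sort | uniq -d` to find a kernel version that appears both among the kernels in /boot and among the installed OVS module RPMs. Here is a small sketch of that trick in isolation, with an invented function name and invented version strings. It assumes neither list contains duplicates of its own:

```bash
#!/usr/bin/env bash
# Print the entries common to two whitespace-separated lists.
common_versions() {
    # Unquoted on purpose: word splitting puts every entry on its own line.
    # uniq -d then keeps only lines that occur more than once.
    printf '%s\n' $1 $2 | sort | uniq -d
}

# Sample inputs (invented, not taken from a real host).
kernels="2.6.32.43-0.4.1.xs1.6.10.734.170748 2.6.32.43-0.4.1.xs1.8.0.835.170778"
modules="2.6.32.43-0.4.1.xs1.6.10.734.170748"

common_versions "$kernels" "$modules"
```

If more than one version is common to both lists, the rollback script as written will not pick one for you, so check the result by hand in that case.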