In the physical world, when you power on a server, it’s already cabled (hopefully).

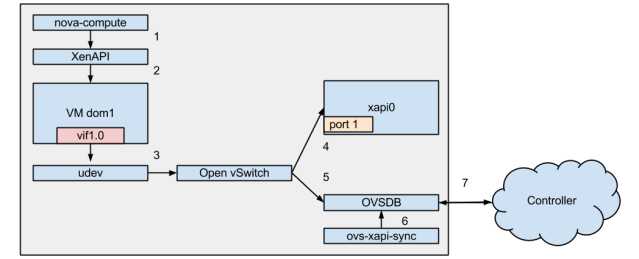

With VMs, things are a bit different. Here’s the sequence of events when a VM is started in Nova, and what happens on XenServer to wire it up to Open vSwitch.

- nova-compute starts the VM via XenAPI

- XenAPI’s VM.start creates a domain and the VM’s vifs on the hypervisor

- The Linux userspace device manager (udev) receives this event, and the rules within /etc/udev/rules.d are processed in lexical order, firing the scripts they reference

- Xen’s vif plug script fires and, at a minimum, creates a port on the relevant virtual switch

- Newer versions (XS 6.1+) of this plug script also have a setup-vif-rules script, which creates several entries in the OpenFlow table (descriptions grabbed straight from the code comments):

    - Allow DHCP traffic (outgoing UDP on port 67)
    - Filter ARP requests
    - Filter ARP responses
    - Allow traffic from specified ipv4 addresses
    - Neighbour solicitation
    - Neighbour advertisement
    - Allow traffic from specified ipv6 addresses
    - Drop all other neighbour discovery
    - Drop other specific ICMPv6 types
        - Router advertisement
        - Redirect gateway
        - Mobile prefix solicitation
        - Mobile prefix advertisement
        - Multicast router advertisement
        - Multicast router solicitation
        - Multicast router termination
    - Drop everything else

- Creating the port on the virtual switch also adds entries to OVSDB, the database that backs Open vSwitch.

- ovs-xapi-sync, which starts when XenAPI/Open vSwitch start up, keeps a local copy of the system’s state in memory. It watches for changes in the Bridge and Interface tables and pulls XenServer-specific data into other columns of those tables.

- On many OVSDB events, including creates and updates to the tables touched by these operations, the OVS controller is notified via JSON-RPC. Thanks to Scott Lowe for clarifying this part.
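To give a feel for what the setup-vif-rules step installs, here is a minimal Python sketch that renders `ovs-ofctl add-flow` commands for the IPv4 portion of the rule list (DHCP, ARP filtering, allowed source addresses, drop everything else). It is not the actual XenServer script: the `Vif` type, the priority values and the exact match fields are assumptions chosen for illustration, and the IPv6/neighbour discovery rules are left out. The commands are only printed, never executed.

```python
import shlex
from dataclasses import dataclass, field
from typing import List


@dataclass
class Vif:
    """Hypothetical description of a VM interface, for illustration only."""
    bridge: str   # virtual switch the vif is plugged into, e.g. "xenbr0"
    ofport: int   # OpenFlow port number of the vif on that bridge
    mac: str      # MAC address the guest is allowed to use
    ipv4_addrs: List[str] = field(default_factory=list)  # addresses the guest may send from


# Hypothetical priorities: OpenFlow matches the highest-priority flow first,
# so the catch-all drop sits below every allow rule.
ALLOW_PRIORITY = 4000
DROP_PRIORITY = 3000


def vif_flows(vif: Vif) -> List[str]:
    """Return flow specs (ovs-ofctl syntax) for a simplified, IPv4-only rule set."""
    base = f"in_port={vif.ofport},dl_src={vif.mac}"
    flows = [
        # Allow DHCP traffic (outgoing UDP to port 67) from the guest's MAC.
        f"priority={ALLOW_PRIORITY},{base},udp,tp_dst=67,actions=normal",
    ]
    for ip in vif.ipv4_addrs:
        flows += [
            # ARP requests (op 1) and responses (op 2) are only allowed when the
            # sender hardware/protocol addresses are the guest's own MAC and IP.
            f"priority={ALLOW_PRIORITY},{base},arp,arp_op=1,arp_sha={vif.mac},arp_spa={ip},actions=normal",
            f"priority={ALLOW_PRIORITY},{base},arp,arp_op=2,arp_sha={vif.mac},arp_spa={ip},actions=normal",
            # Allow IPv4 traffic sourced from the specified address.
            f"priority={ALLOW_PRIORITY},{base},ip,nw_src={ip},actions=normal",
        ]
    # Drop everything else arriving on this port.
    flows.append(f"priority={DROP_PRIORITY},in_port={vif.ofport},actions=drop")
    return flows


def add_flow_commands(vif: Vif) -> List[str]:
    """Render each flow as an ovs-ofctl add-flow command line (not executed)."""
    return [
        f"ovs-ofctl add-flow {shlex.quote(vif.bridge)} {shlex.quote(flow)}"
        for flow in vif_flows(vif)
    ]


if __name__ == "__main__":
    example = Vif(bridge="xenbr0", ofport=5, mac="fe:ff:ff:ff:ff:ff", ipv4_addrs=["10.0.0.5"])
    for cmd in add_flow_commands(example):
        print(cmd)
```

For a vif with a single IPv4 address this prints five commands. Because OpenFlow picks the matching flow with the highest priority rather than the one inserted first, giving the drop rule a lower priority is what makes it a true "everything else" rule.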

After all of that happens, the VM boots and the guest OS sets up its network stack.